Jim Savage

Jan 2017

This post describes how to conduct posterior predictive checking, and model comparison according to log posterior density. Before describing this, let’s quickly describe a few of the preliminaries.

Out of sample likelihood/log likelihood

Let’s say we have a very simple linear model of with a single covariate . , and are the model’s parameters.

How do we go about assessing out of sample fit in a probabilistic sense? One popular method is to take the (log) likelihood of some observations that weren’t used to train the model. What does this mean?

Let’s say we have our estimates , and and a new observation . Given the parameters and we know that the outcomes should be distributed as

Now we ask: what was the density at the actual outcome ?

The value of the predictive density at an observed outcome is the out of sample likelihood of a single datapoint. If we assume (conditional) independence of the draws, the out of sample likelihood of the full out-of-sample draw is the product of all the individual out of sample likelihoods .

You can probably see the problem here—for reasonably large spreads of the data, the value of the predictive density for a datapoint is typically less than 1, and the product of many such datapoints will be an extremely small number. This might give us numerical issues. One way around this is to take the log of the likelihood—the Log Likelihood. Because the log of numbers in (0, 1) are negative numbers large in absolute value, the sum of these numbers will also have a large absolute value, so we’ll not get numerical issues in computation.

So what does the out of sample log likelhood mean?

Log likelihood is a confusing concept to beginners as the values of the numbers aren’t easily interpretable. You might run the analysis and get a number of -3477 and ask “is that good? Bad? What does it mean?” These numbers are more meaningful when conducting model comparison, that is, we get the out of sample log likelihood for two different models. Then we can compare them. The usefulness of this approach is best illustrated with an example.

Imagine that we have an outlier—rather than as in the image above, it is -10. Under the proposed model, we’re essentially saying that the such an outcome is all but impossible. The probability of observing an observation that low or lower is 3.73^{-36}. The density of such a point would be 4.69^{-35}—a very small number. But its log is large in absolute value: -79.04. Compare that with the log likelihood of the original : -2.04. An outlier penalizes the log likelihood far more than a point at the mode.

This idea helps us do good modeling: we want to give positive weight to outcomes that happen, and no weight to impossible outcomes.

Model comparison with log likelihood

Now say we have a competing model, one with the same parameter estimates but with a Student t-distributed outcome, with a degrees of freedom parameter . Under this model, the log likelihood of with the same parameter estimates is -8.94—a big difference!.

Formally, so-called likelihood ratio tests specify a limiting distribution of the likelihood of two models (one should be a nested case of another, as in this one where the normal model is the same as the student T model with ) as the number of observations grows. If we have

Enter Bayes

There are two big issues with the sort of analysis above:

- When we have limited observations, we don’t want to rely on asymptotics.

- We are not certain about the values of our parameters, and we’d like the uncertainty to carry through to our model comparison.

By using Bayesian inference, we are able to avoid these issues in model comparison.

Posterior prediction and posterior density

In theory, our posterior is an abstract distribution; in practice, it’s a matrix of data, where each column corresponds to a parameter, and each row a draw from . For instance, the first five rows of our posterior matrix might look like:

| a | b | sigma |

|---|---|---|

| 1.90 | 0.54 | 0.93 |

| 2.04 | 0.58 | 1.05 |

| 1.91 | 0.50 | 0.92 |

| 2.19 | 0.46 | 0.97 |

| 1.97 | 0.51 | 1.01 |

Each of these parameter combinations implies a different predictive distribution. Consequently, they also imply different out-of-sample likelihoods for our out of sample datapoint . This is illustrated for 30 posterior replicates below.

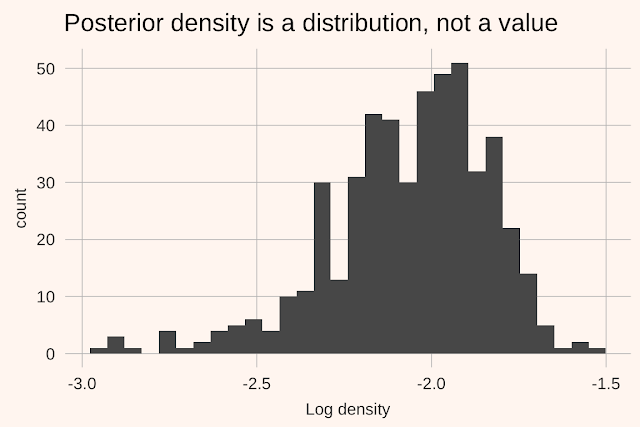

The density of the out-of-sample data across posterior draws is known as the (out of sample) log posterior density of a datapoint. Unlike the out of sample likelihood, we are uncertain of its value and need to perform inference. To illustrate this, let’s look at a histogram of the log posterior density in the above example (but with 500 replicates, not 30):

Note that the log posterior density can be evaluated for each datapoint; if we have many data-points for which we want to evaluate the LPD we simply need to evaluate it for each, and sum across all data-points.

Evaluating LPD

In rstanarm

In

rstanarm, log posterior density is calculated automatically, whenever a model is called. To extract the matrix of likelihoods, simply use:library(rstanarm)

lpd <- log_lik(your_stanreg_object)

In Stan

In Stan the process is slightly more involved, in that nothing is automatic—you need to generate your own log likelihood/LPD numbers. This is done in the generated quantities block. To implement this for the simple model above, we would use the Stan code:

generated quantities {

vector[N] log_lik;

for(i in 1:N) {

log_lik[i] = normal_lpdf(y_new[i] | a + b*x_new[i], sigma);

}

}

This will record the log posterior density of each observation

1:N across each posterior draw of a, b, sigma. We can then extract the LPD from a fitted stanfit object using:library(loo)

log_lik <- extract_log_lik(your_fitted_stan_model, "log_lik")

Note that the Stan implementaton above can be implemented for data that was not used to fit the model, which is why I’ve called the data we’re using to evaluate LPD

y_new and x_new.Posterior predictions

In the motivation for using log posterior density above, we noted that we want to take account of uncertainty in our parameter estimates in calculating the likelihood.

A similar idea is that when we want to generate predictions, we are not only concerned with the distribution of the data around the expected value (the predictive distribution). We’re interested in how the expected value itself might vary with uncertainty in the parameter estimates. And so for every out-of-sample datapoint, we generate posterior predictions—draws from the predictive distributions across draws of the parameters. In pseudocode:

for each posterior draw

for each observation of out of sample data

calculate expectation given posterior draw

draw from predictive distribution around the expectation

next

next

So in our predictive densities for the point from above, generating posterior draws would involve drawing a random number from each of these distributions .

These predictive draws automatically include the uncertainty both of the data distribution, but also of the parameters of that distribution. It is helpful to compare the posterior predictive density (black) for a large number of replicates against the true generative distribution (red):

They are the same. Yet for small samples, the uncertainty in the posterior predictive distribution is typically wider:

So why bother?

Using log posterior density and posterior predictive draws as a basis for model evaluation and comparison is good practice. When our model puts weight on outcomes that can’t occur, we want to know about it. When the model doesn’t put weight on outcomes that do, we want to know about it. And often just looking at these plots is enough to help you catch issues with your model. Especially for the big and complex models that I work with, getting a handle on how much uncertainty comes from our parameter estimates, and how much is due to the residual, is extremely helpful.

I should note here that this sort of checking helps us think about two important sources of uncertainty: parameter uncertainty and the model residual. But it gives only some guidance on whether the model is the right one. Many models with wildly different implications can provide similar support for the data, and only bootleather and deep thought can help you work out which to use.